This is one of favorite Blog post i have submitted in my old blog "jehanzebahsan.wordpress.com" . quoting the same Text Here

----------------------------------------------------------------------------------------------------------------------------------------------

A few days ago i wrote an email regarding “enq: TX – allocate ITL entry” seen on one of our production databases to my development team. i just pasted that here in the post

Issue:

The issue we have faced on a DB was extensive locking on the TBL_jehanzeb (an arbitrary name used here) table. The specific wait events we have observed on the database were “enq: TX – allocate ITL entry”

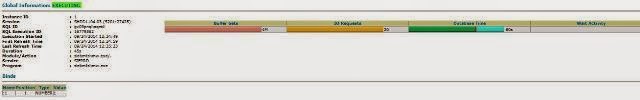

Our EM grid control was showing some horrific hills; and we were especially concerned about the brown ones ( the configuration waits).. As a DBA i have destroyed my aesthetics ; because i cant admire such a beautiful blend of colors on the EM GRID control .

Analysis:

On the particular day the traffic on the database was very high raising the Transactions per seconds to 90k ( which on average is 40-50k )

The Particular wait event we faced on this table explains the situation as following.

This is the snapshot of an Oracle Block. With the value of PCTFREE=10 in the TBL_jehanzeb table

The oracle block Header the top part shown in the figure contains the ITL ( interested transaction list) i-e a linked link where each node represents the a single interested transaction. As the number of interested transaction (especially updates) grow the header starts expanding downwards and the row data starts expanding upwards until there’s a situation shown in the figure below

There is no more room in the block for a new ITL entry. A new transaction comes in to update Record3. The transaction will have to wait. This is not the same wait as a row lock; because there is no lock on the row marked Record3.Instead, session will wait on a special wait event known as the “enq: TX – allocate ITL entry”. The moment one of the transactions – from either Session1 or Session2 end by commit or rollback, the new transaction can grab that ITL slot and complete the locking operation. Until then the session3 will acquire a block level exclusive lock.

The V$SEGMENT_STATSTICS shows the significantly high ITL waits on the table TBL_jehanzeb as compared to the closest competitors . (Rest of the table names omitted using the renowned MS paint again for the INFO. SECUrity reasons)

Solution:

- Change the application design to avoid this bone of contention by reducing the number of hits on the table TBL_jehanzeb

- Increase the PCTFREE and INITTRANS parameter as the database level for this particular table.

- alter table <name> pctfree 50;

- alter table <name> initrans 100